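Cluster Planning and Basic Parameter Settings

An earlier article already covered the AllinOne deployment; nodes can be added to an AllinOne cluster after it is deployed. This lab deploys a multi-node cluster manually, step by step.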

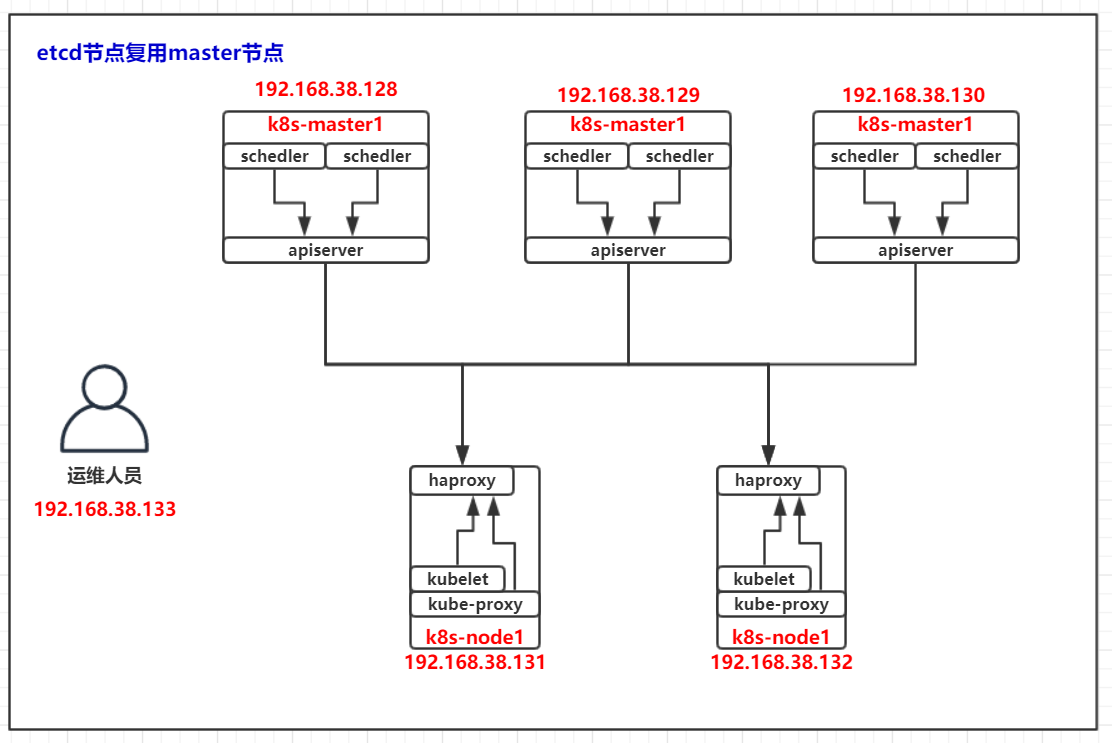

1. Architecture

Node information

| Hostname | IP | OS | Server spec | Role |
|---|---|---|---|---|
| k8s-master1 | 192.168.200.128 | CentOS 7.6.1810 | 4C4G | master node, etcd node |
| k8s-master2 | 192.168.200.129 | CentOS 7.6.1810 | 4C2G | master node, etcd node |
| k8s-master3 | 192.168.200.130 | CentOS 7.6.1810 | 4C2G | master node, etcd node |
| k8s-node1 | 192.168.200.131 | CentOS 7.6.1810 | 4C2G | worker node |
| k8s-node2 | 192.168.200.132 | CentOS 7.6.1810 | 4C2G | worker node |
| node1 | 192.168.200.133 | CentOS 7.6.1810 | 4C2G | operations engineer's workstation |

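2. Three Notes

- Note 1: Make sure every node uses the same time zone and that clocks are synchronized. See: configuring chrony time synchronization

- Note 2: When creating a multi-master cluster on a public cloud, also read "Deploying kubeasz on a public cloud"

- Note 3: Upgrading the operating system to a newer stable kernel is recommended; see the kernel upgrade document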

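3. Basic Environment Setup

- Each machine should preferably have 2C2G or more

- The OS is a minimal install of CentOS 7.6.1810

- Configure basic networking, package repositories, SSH login, and so on. See: CentOS7 system initialization script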

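4. Install and Prepare Ansible on the Ansible Control Node

4.1 Install Ansible with pip

See https://blog.csdn.net/qq_30633777/article/details/84205709 for configuring a pip mirror. Once it is configured, you no longer need to pass -i http://xxx on every install.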
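One way to make the mirror permanent is a per-user pip configuration file. The sketch below writes one that points at the Aliyun mirror used in the install commands of this section. It assumes the legacy per-user location ~/.pip/pip.conf, and write_pip_conf is a helper name made up for this example, not something from the referenced article.

```shell
#!/bin/sh
# write_pip_conf is a hypothetical helper.
# $1 is the home directory in which to create .pip/pip.conf.
write_pip_conf() {
    conf_dir="$1/.pip"
    mkdir -p "$conf_dir"
    # index-url replaces the -i option that would otherwise be typed each time.
    cat > "$conf_dir/pip.conf" <<'EOF'
[global]
index-url = https://mirrors.aliyun.com/pypi/simple/
EOF
}
```

Calling write_pip_conf "$HOME" as the user who runs pip should be enough; an existing pip.conf at that path would be overwritten, so check first.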

[root@ansible ~]# yum install git python-pip -y

[root@ansible ~]# pip install pip --upgrade -i https://mirrors.aliyun.com/pypi/simple/

[root@ansible ~]# pip install ansible==2.6.18 netaddr==0.7.19 -i https://mirrors.aliyun.com/pypi/simple/

4.2 Configure hosts name resolution

[root@ansible ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.200.128 k8s-master1

192.168.200.129 k8s-master2

192.168.200.130 k8s-master3

192.168.200.131 k8s-node1

192.168.200.132 k8s-node2

192.168.200.133 node1 client ansible
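If the entries have to be added on several machines, appending them idempotently avoids duplicates when the step is repeated. This is a minimal sketch; add_host_entry is a hypothetical helper, not part of kubeasz.

```shell
#!/bin/sh
# add_host_entry is a hypothetical helper.
# Usage: add_host_entry <hosts-file> <ip> <name> [alias...]
# Appends "ip name alias..." only if no line in the file already starts with that IP.
add_host_entry() {
    hosts_file="$1"
    ip="$2"
    shift 2
    # awk exits 0 when the first field of some line equals the IP.
    if ! awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$hosts_file" 2>/dev/null; then
        echo "${ip} $*" >> "$hosts_file"
    fi
}
```

For example, add_host_entry /etc/hosts 192.168.200.128 k8s-master1 adds the first master, and running it a second time leaves the file unchanged.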

4.3 Configure passwordless SSH login from the Ansible control node

[root@ansible ~]# ssh-keygen -t rsa -b 2048 -N '' -f ~/.ssh/id_rsa

[root@ansible ~]# for i in k8s-master{1..3};do ssh-copy-id $i;done

[root@ansible ~]# for i in k8s-node{1..2};do ssh-copy-id $i;done

[root@ansible ~]# ssh-copy-id ansible
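Before moving on, it is worth confirming that key-based login really works for every host, because Ansible cannot answer a password prompt. A minimal sketch, with check_passwordless as a hypothetical helper name:

```shell
#!/bin/sh
# check_passwordless is a hypothetical helper.
# For each host given as an argument, attempt a non-interactive SSH login.
# Returns non-zero if any host could not be reached without a password.
check_passwordless() {
    failed=0
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of prompting for a password.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true 2>/dev/null; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            failed=1
        fi
    done
    return "$failed"
}
```

On the control node this could be run as check_passwordless k8s-master1 k8s-master2 k8s-master3 k8s-node1 k8s-node2 ansible. Note that BatchMode also refuses to confirm unknown host keys, so a host that was never contacted before would show as FAILED.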

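5. Orchestrate the k8s Installation from the Ansible Control Node

5.0 Download the project source

5.1 Download the binaries

5.2 Download the offline docker images

Here the easzup script downloads everything needed for 5.0/5.1/5.2 in one go; after it runs successfully, all files (kubeasz code, binaries, offline images) are organized under /etc/ansible

# The latest version at the time of writing is 2.1.0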

[root@ansible ~]# export release=2.1.0

[root@ansible ~]# curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/${release}/easzup

[root@ansible ~]# chmod +x ./easzup

# Download with the helper script

[root@ansible ~]# ./easzup -D

The output is rather long, so it is posted here: https://github.com/imooc-courses/cobbler/issues/3#issue-538985884

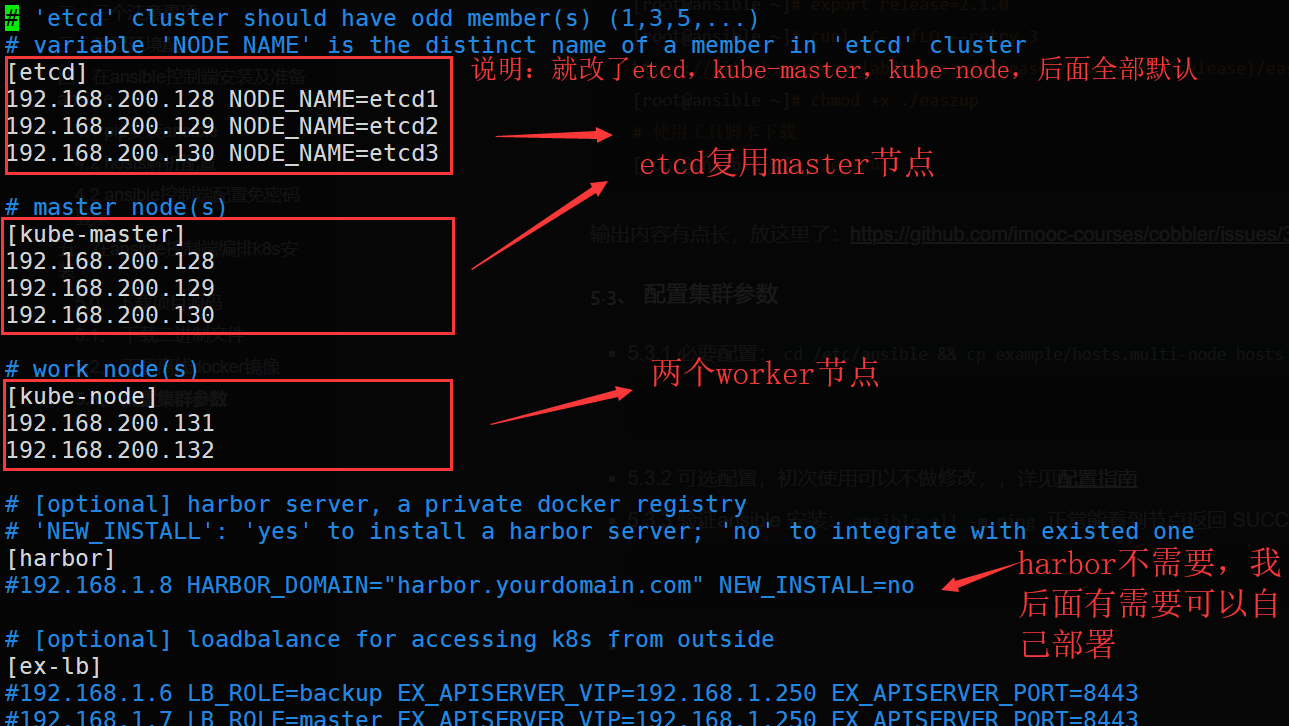

5.3、 配置集群参数

-

5.3.1 必要配置:

cd /etc/ansible && cp example/hosts.multi-node hosts, 然后实际情况修改此hosts文件

-

5.3.2 可选配置,初次使用可以不做修改,,详见配置指南

-

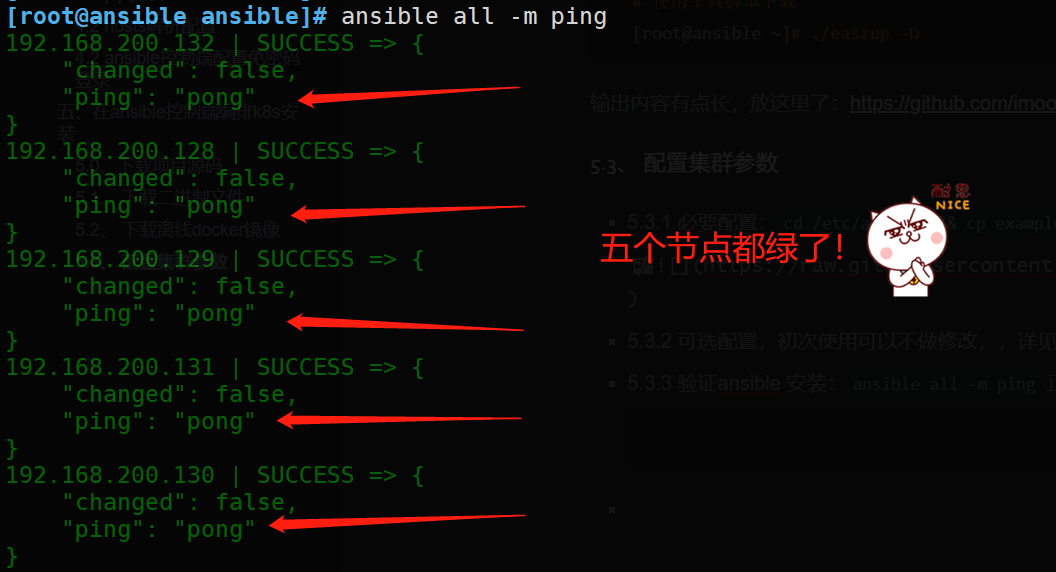

5.3.3 验证ansible 安装:

ansible all -m ping正常能看到节点返回 SUCCESS
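With more than a handful of nodes it is easy to overlook one that did not answer. The sketch below counts the SUCCESS lines in the ping output; count_ping_success is a hypothetical helper, and it assumes each host's result starts with a line of the form "host | SUCCESS => {".

```shell
#!/bin/sh
# count_ping_success is a hypothetical helper.
# Reads the output of "ansible all -m ping" on stdin and prints
# the number of hosts that reported SUCCESS.
count_ping_success() {
    grep -c '| SUCCESS'
}
```

A possible use is cd /etc/ansible && ansible all -m ping | count_ping_success, comparing the printed number with the number of hosts in the inventory.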

5.4 Start the installation

Step-by-step installation

5.4.1 Create certificates and prepare the environment

Details: https://github.com/easzlab/kubeasz/blob/master/docs/setup/01-CA_and_prerequisite.md

[root@ansible ansible]# ansible-playbook 01.prepare.yml

5.4.2 Install the etcd cluster

Details: https://github.com/easzlab/kubeasz/blob/master/docs/setup/02-install_etcd.md

[root@ansible ansible]# ansible-playbook 02.etcd.yml

Verify the cluster status:

# Check the service status

[root@k8s-master1 ~]# systemctl status etcd

● etcd.service - Etcd Server

Loaded: loaded (/etc/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: active (running) since Tue 2019-12-17 19:48:01 CST; 27min ago

Docs: https://github.com/coreos

Main PID: 11413 (etcd)

CGroup: /system.slice/etcd.service

└─11413 /opt/kube/bin/etcd --name=etcd1 --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem --peer-...

# View the service log

[root@k8s-master1 ~]# journalctl -u etcd

-- Logs begin at Tue 2019-12-17 23:04:34 CST, end at Tue 2019-12-17 20:17:09 CST. --

Dec 17 19:48:00 k8s-master1 systemd[1]: Starting Etcd Server...

Dec 17 19:48:00 k8s-master1 etcd[11413]: etcd Version: 3.3.15

Dec 17 19:48:00 k8s-master1 etcd[11413]: Git SHA: 94745a4ee

Dec 17 19:48:00 k8s-master1 etcd[11413]: Go Version: go1.12.9

Dec 17 19:48:00 k8s-master1 etcd[11413]: Go OS/Arch: linux/amd64

Dec 17 19:48:00 k8s-master1 etcd[11413]: setting maximum number of CPUs to 4, total number of available CPUs is 4

Dec 17 19:48:00 k8s-master1 etcd[11413]: peerTLS: cert = /etc/etcd/ssl/etcd.pem, key = /etc/etcd/ssl/etcd-key.pem, ca = , trusted-ca =

Dec 17 19:48:00 k8s-master1 etcd[11413]: listening for peers on https://192.168.200.128:2380

Dec 17 19:48:00 k8s-master1 etcd[11413]: The scheme of client url http://127.0.0.1:2379 is HTTP while peer key/cert files are presente

Dec 17 19:48:00 k8s-master1 etcd[11413]: listening for client requests on 127.0.0.1:2379

Dec 17 19:48:00 k8s-master1 etcd[11413]: listening for client requests on 192.168.200.128:2379

Dec 17 19:48:00 k8s-master1 etcd[11413]: name = etcd1

Dec 17 19:48:00 k8s-master1 etcd[11413]: data dir = /var/lib/etcd

Dec 17 19:48:00 k8s-master1 etcd[11413]: member dir = /var/lib/etcd/member

Dec 17 19:48:00 k8s-master1 etcd[11413]: heartbeat = 100ms

Dec 17 19:48:00 k8s-master1 etcd[11413]: election = 1000ms

Dec 17 19:48:00 k8s-master1 etcd[11413]: snapshot count = 100000

Dec 17 19:48:00 k8s-master1 etcd[11413]: advertise client URLs = https://192.168.200.128:2379

Dec 17 19:48:00 k8s-master1 etcd[11413]: initial advertise peer URLs = https://192.168.200.128:2380

Dec 17 19:48:00 k8s-master1 etcd[11413]: initial cluster = etcd1=https://192.168.200.128:2380,etcd2=https://192.168.200.129:2380,etcd3

Dec 17 19:48:00 k8s-master1 etcd[11413]: starting member 4cfc9b449d51672f in cluster aa87c8b8196317d7

Dec 17 19:48:00 k8s-master1 etcd[11413]: 4cfc9b449d51672f became follower at term 0

Dec 17 19:48:00 k8s-master1 etcd[11413]: newRaft 4cfc9b449d51672f [peers: [], term: 0, commit: 0, applied: 0, lastindex: 0, lastterm:

Dec 17 19:48:00 k8s-master1 etcd[11413]: 4cfc9b449d51672f became follower at term 1

Dec 17 19:48:00 k8s-master1 etcd[11413]: simple token is not cryptographically signed

Dec 17 19:48:00 k8s-master1 etcd[11413]: starting peer 232defbbe6f9dda5...

# Run on any master node; three "healthy" lines mean the cluster is fine

[root@k8s-master1 ~]# export NODE_IPS="192.168.200.128 192.168.200.129 192.168.200.130"

[root@k8s-master1 ~]# for ip in ${NODE_IPS}; do

ETCDCTL_API=3 etcdctl \

--endpoints=https://${ip}:2379 \

--cacert=/etc/kubernetes/ssl/ca.pem \

--cert=/etc/etcd/ssl/etcd.pem \

--key=/etc/etcd/ssl/etcd-key.pem \

endpoint health; done

https://192.168.200.128:2379 is healthy: successfully committed proposal: took = 4.634889ms

https://192.168.200.129:2379 is healthy: successfully committed proposal: took = 6.477304ms

https://192.168.200.130:2379 is healthy: successfully committed proposal: took = 6.285599ms
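The per-endpoint loop can also be collapsed into a single etcdctl call, since --endpoints accepts a comma-separated list. The sketch below builds that list from the same NODE_IPS value and only calls etcdctl when it is installed; the certificate paths are the ones used in the loop above.

```shell
#!/bin/sh
# Build a comma-separated endpoint list so one etcdctl call covers all members.
NODE_IPS="192.168.200.128 192.168.200.129 192.168.200.130"
ENDPOINTS=""
for ip in ${NODE_IPS}; do
    # Prepend a comma only when the list already has an entry.
    ENDPOINTS="${ENDPOINTS:+${ENDPOINTS},}https://${ip}:2379"
done
echo "${ENDPOINTS}"

# Query etcd only when etcdctl is actually available on this machine.
if command -v etcdctl >/dev/null 2>&1; then
    ETCDCTL_API=3 etcdctl \
        --endpoints="${ENDPOINTS}" \
        --cacert=/etc/kubernetes/ssl/ca.pem \
        --cert=/etc/etcd/ssl/etcd.pem \
        --key=/etc/etcd/ssl/etcd-key.pem \
        endpoint health
fi
```

The single call reports one line per endpoint, just like the loop, and is convenient in scripts because there is only one exit status to check.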

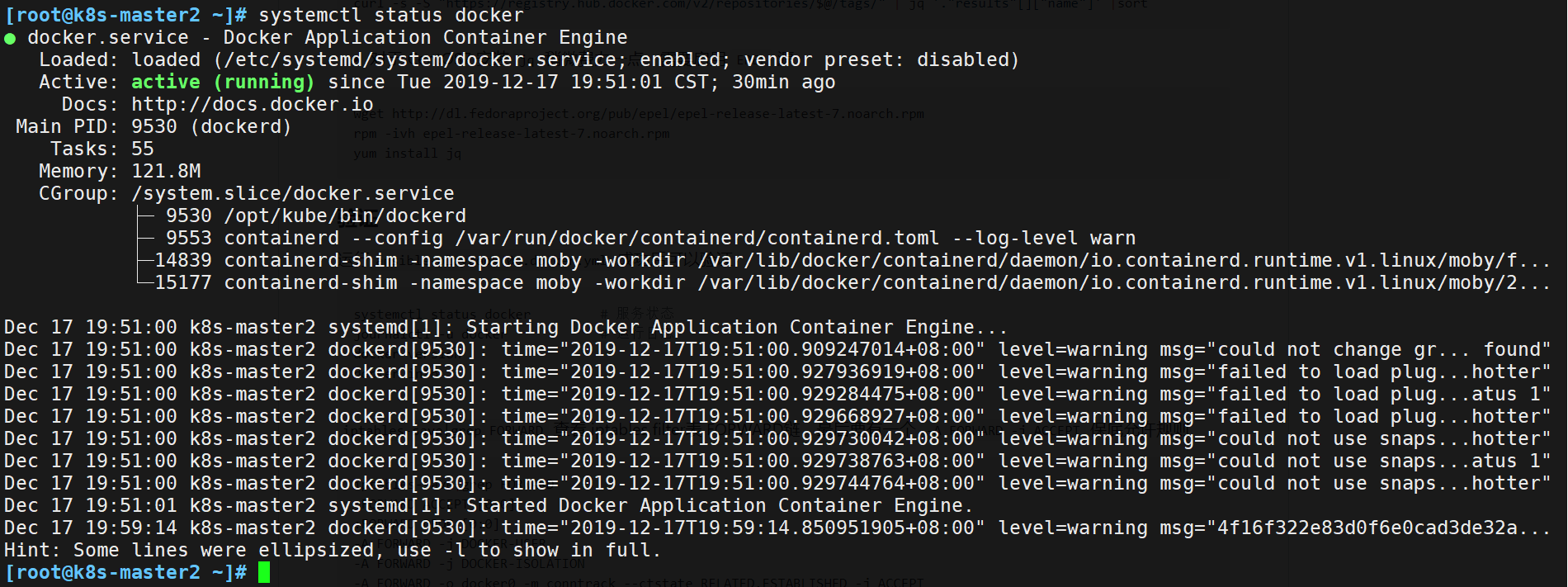

5.4.3 Install the docker service

Details: https://github.com/easzlab/kubeasz/blob/master/docs/setup/03-install_docker.md

[root@ansible ansible]# ansible-playbook 03.docker.yml

Verify:

# docker service status

[root@k8s-master2 ~]# systemctl status docker

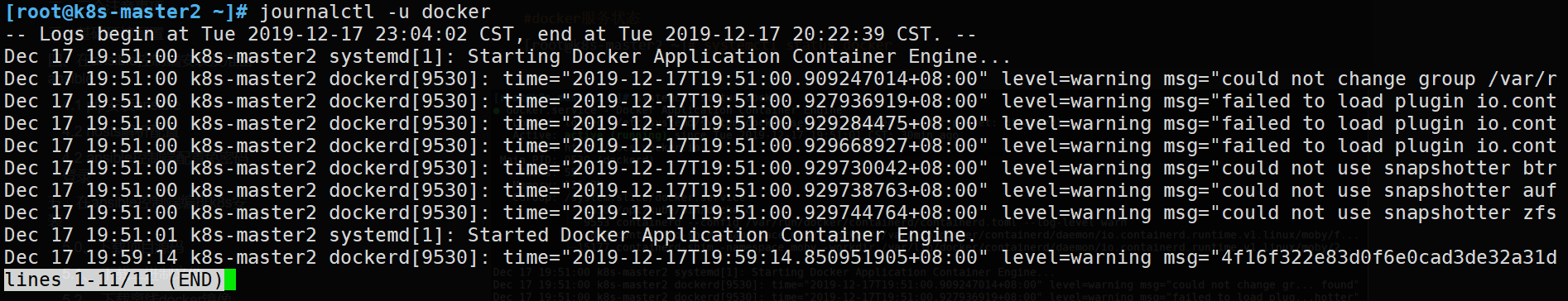

# Service log

[root@k8s-master2 ~]# journalctl -u docker

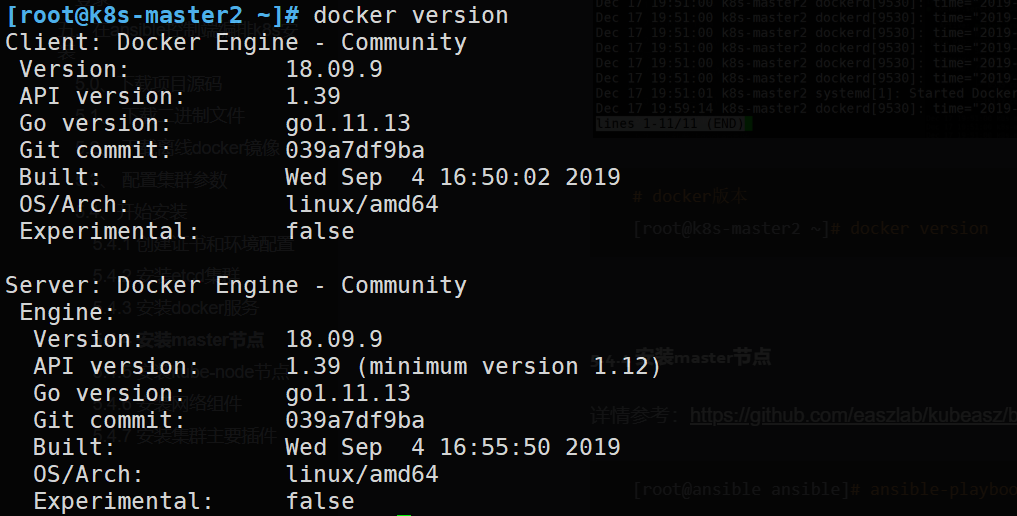

# docker version

[root@k8s-master2 ~]# docker version

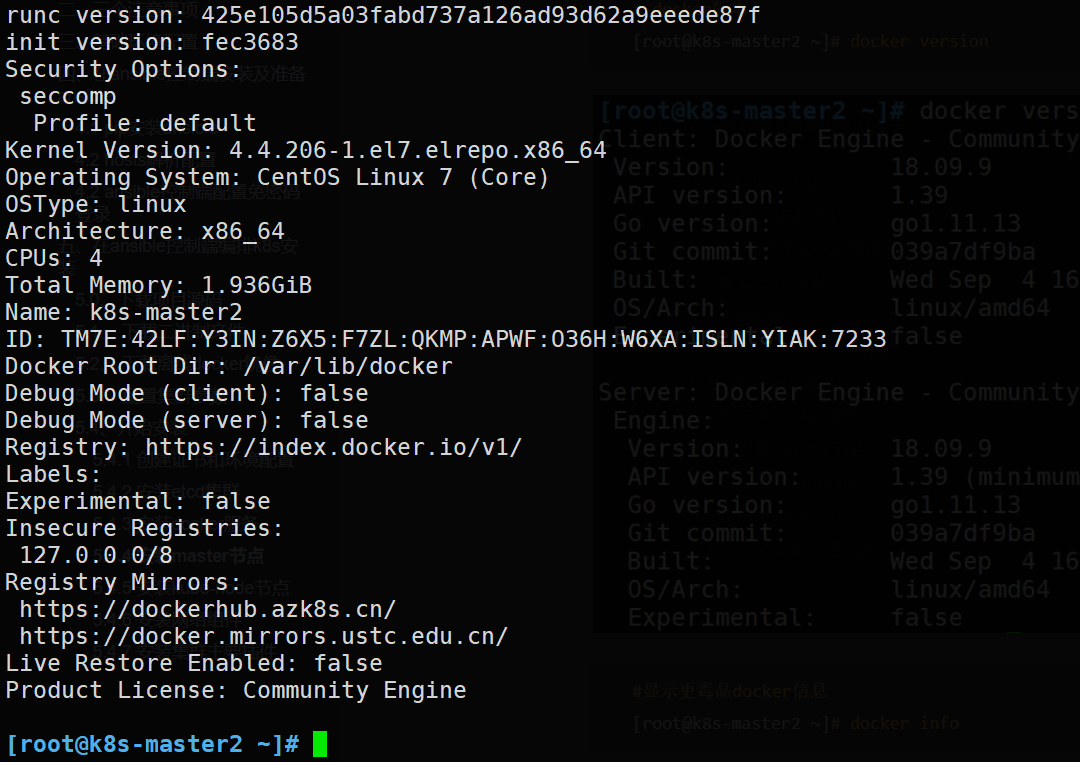

# Show more detailed docker information

[root@k8s-master2 ~]# docker info

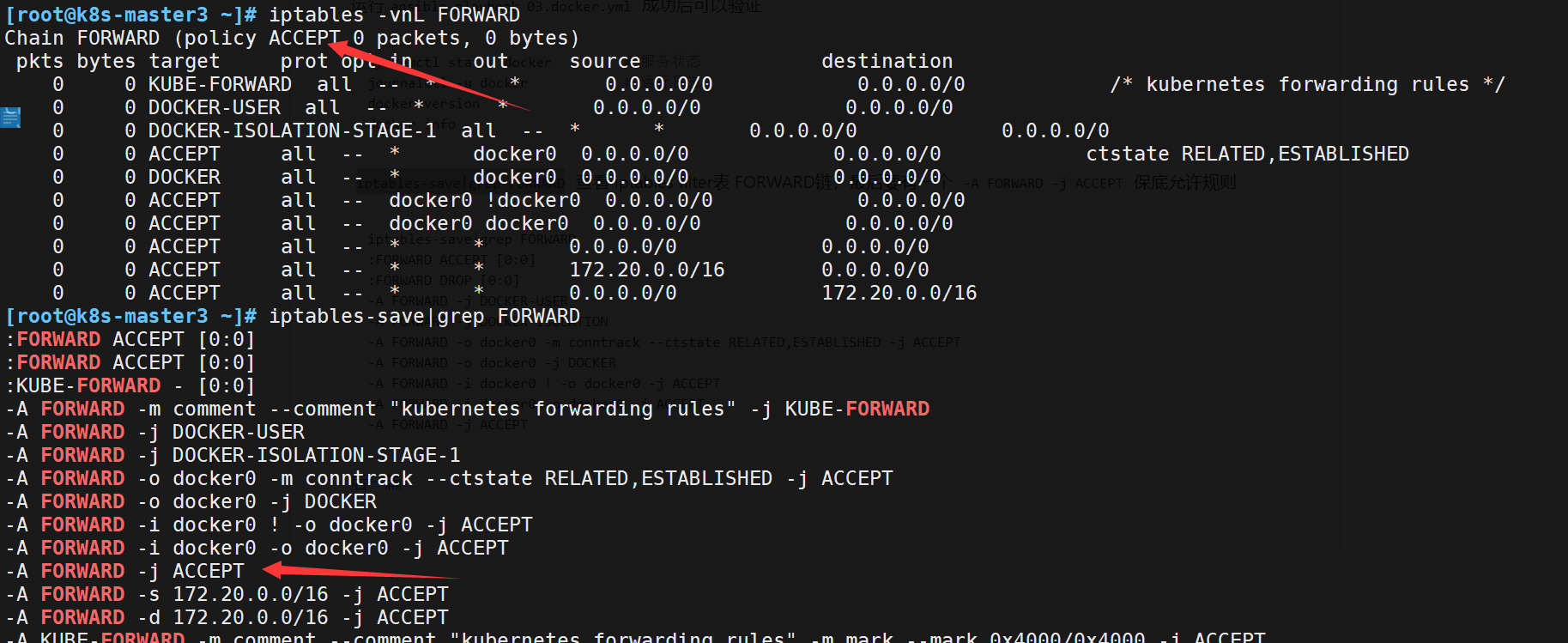

# Check the FORWARD chain of the iptables filter table; the last rule should be the catch-all -A FORWARD -j ACCEPT

[root@k8s-master3 ~]# iptables-save|grep FORWARD
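Instead of reading the grep output by eye, the last FORWARD rule can be extracted and compared directly. A minimal sketch, with last_forward_rule as a hypothetical helper that reads iptables-save output on stdin:

```shell
#!/bin/sh
# last_forward_rule is a hypothetical helper.
# Reads iptables-save output on stdin and prints the last rule
# appended to the FORWARD chain (chain policy lines are ignored).
last_forward_rule() {
    grep -- '^-A FORWARD ' | tail -n 1
}
```

On a node, [ "$(iptables-save -t filter | last_forward_rule)" = "-A FORWARD -j ACCEPT" ] && echo ok would print ok only when the catch-all accept rule is the final FORWARD rule.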

5.4.4 Install the master nodes

Details: https://github.com/easzlab/kubeasz/blob/master/docs/setup/04-install_kube_master.md

[root@ansible ansible]# ansible-playbook 04.kube-master.yml

Verify the master cluster

# Check the service status

systemctl status kube-apiserver

systemctl status kube-controller-manager

systemctl status kube-scheduler

# View the service logs

journalctl -u kube-apiserver

journalctl -u kube-controller-manager

journalctl -u kube-scheduler

# Run kubectl get componentstatus ... the output here looks wrong (only NAME and AGE are shown)

[root@k8s-master1 ~]# kubectl get componentstatus -A

NAME AGE

controller-manager <unknown>

scheduler <unknown>

etcd-0 <unknown>

etcd-2 <unknown>

etcd-1 <unknown>
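A table that shows only NAME and AGE with <unknown> does not by itself say whether the components are healthy. As far as I know this is a display issue reported for kubectl 1.16, but that was not verified in this lab. Requesting the conditions explicitly with jsonpath sidesteps the table output. In the sketch below, unhealthy_components is a hypothetical helper, and the condition type Healthy with status True is what a healthy component is expected to report.

```shell
#!/bin/sh
# unhealthy_components is a hypothetical helper.
# Reads "name<TAB>type=status" lines on stdin and prints the names
# of components whose condition is not Healthy=True.
unhealthy_components() {
    awk -F '\t' '$2 != "Healthy=True" { print $1 }'
}

# Query the cluster only when kubectl is available on this machine.
if command -v kubectl >/dev/null 2>&1; then
    kubectl get componentstatus \
        -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.conditions[0].type}={.conditions[0].status}{"\n"}{end}' \
        | unhealthy_components
fi
```

No output means every component reported Healthy=True; any printed name deserves a look at the corresponding journalctl log.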

5.4.5 Install the kube-node nodes

Details: https://github.com/easzlab/kubeasz/blob/master/docs/setup/05-install_kube_node.md

[root@ansible ansible]# ansible-playbook 05.kube-node.yml

5.4.6 Install the network plugin

Details: https://github.com/easzlab/kubeasz/blob/master/docs/setup/06-install_network_plugin.md

[root@ansible ansible]# ansible-playbook 06.network.yml

Verify

[root@k8s-master1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.200.128 Ready,SchedulingDisabled master 38m v1.16.2

192.168.200.129 Ready,SchedulingDisabled master 38m v1.16.2

192.168.200.130 Ready,SchedulingDisabled master 38m v1.16.2

192.168.200.131 Ready node 35m v1.16.2

192.168.200.132 Ready node 35m v1.16.2
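The same check can be scripted so that a node stuck in NotReady is flagged automatically. A minimal sketch; not_ready_nodes is a hypothetical helper that expects the column layout shown above (name first, status second).

```shell
#!/bin/sh
# not_ready_nodes is a hypothetical helper.
# Reads "kubectl get nodes --no-headers" output on stdin and prints the
# name of every node whose STATUS column does not start with "Ready".
# "Ready,SchedulingDisabled" (the masters here) still counts as ready.
not_ready_nodes() {
    awk '$2 !~ /^Ready/ { print $1 }'
}
```

Running kubectl get nodes --no-headers | not_ready_nodes on a master should print nothing once the network plugin is up on every node.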

5.4.7 Install the main cluster add-ons

Details: https://github.com/easzlab/kubeasz/blob/master/docs/setup/07-install_cluster_addon.md

[root@ansible ansible]# ansible-playbook 07.cluster-addon.yml

6. Application Deployment

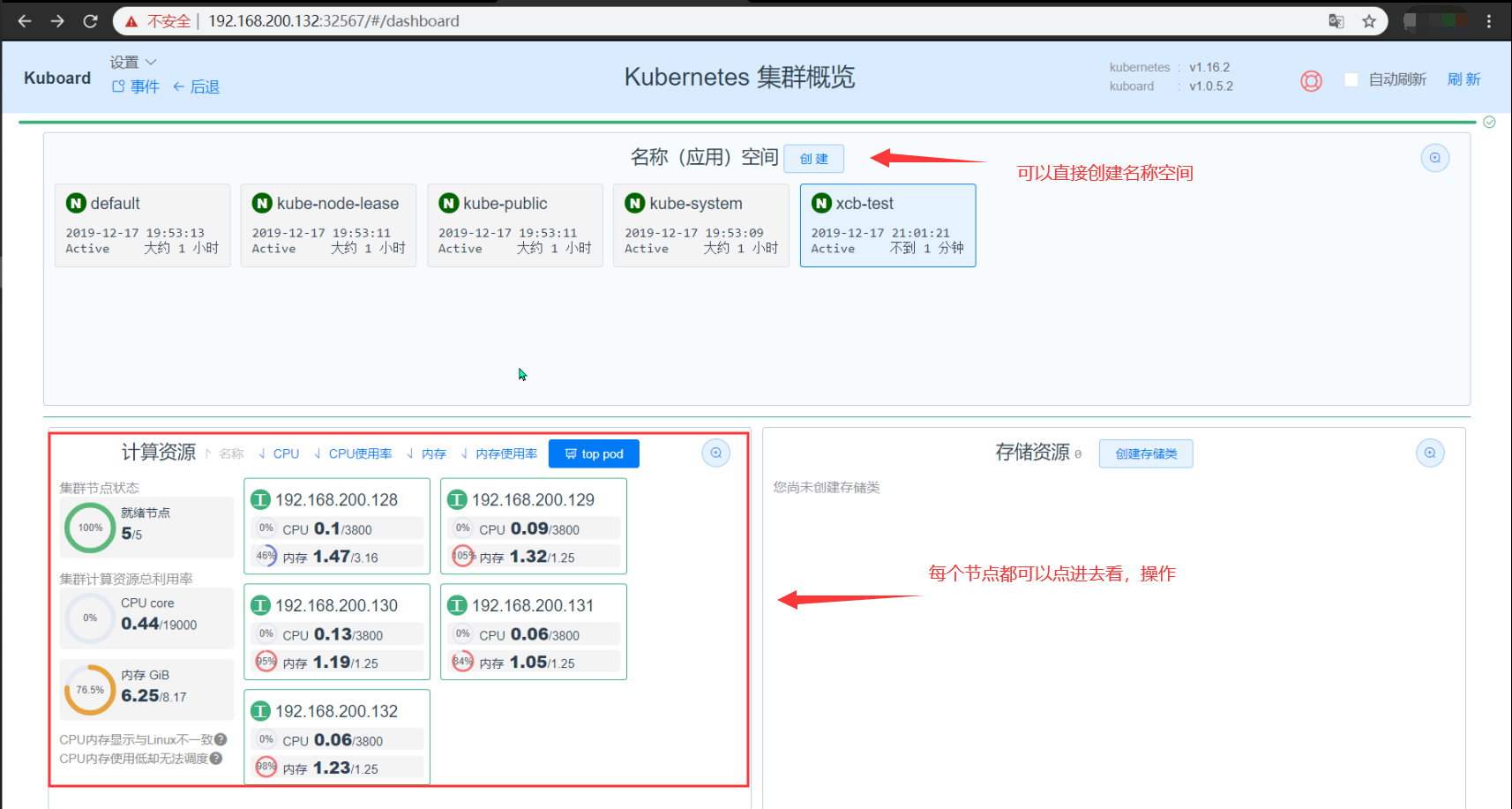

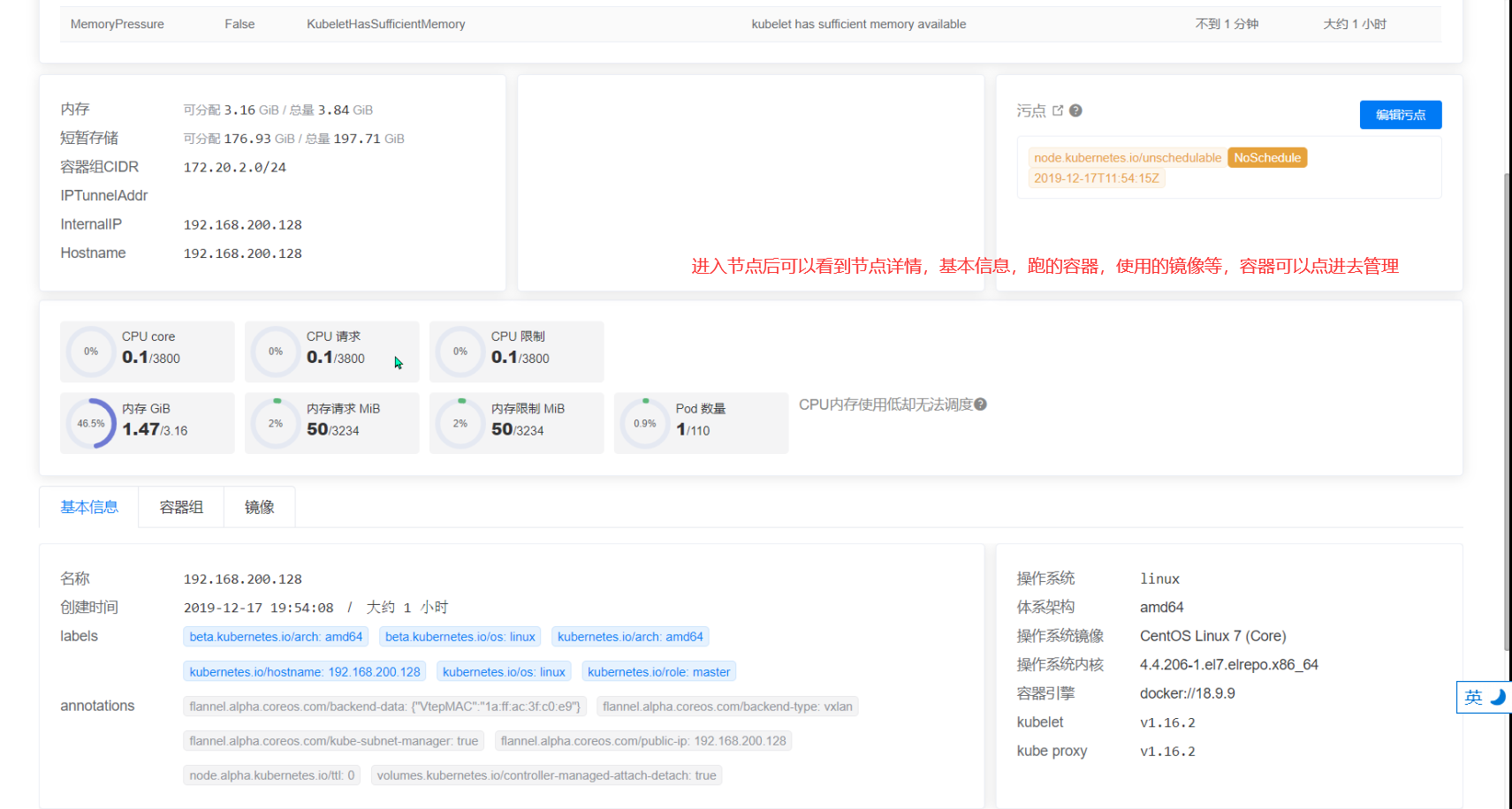

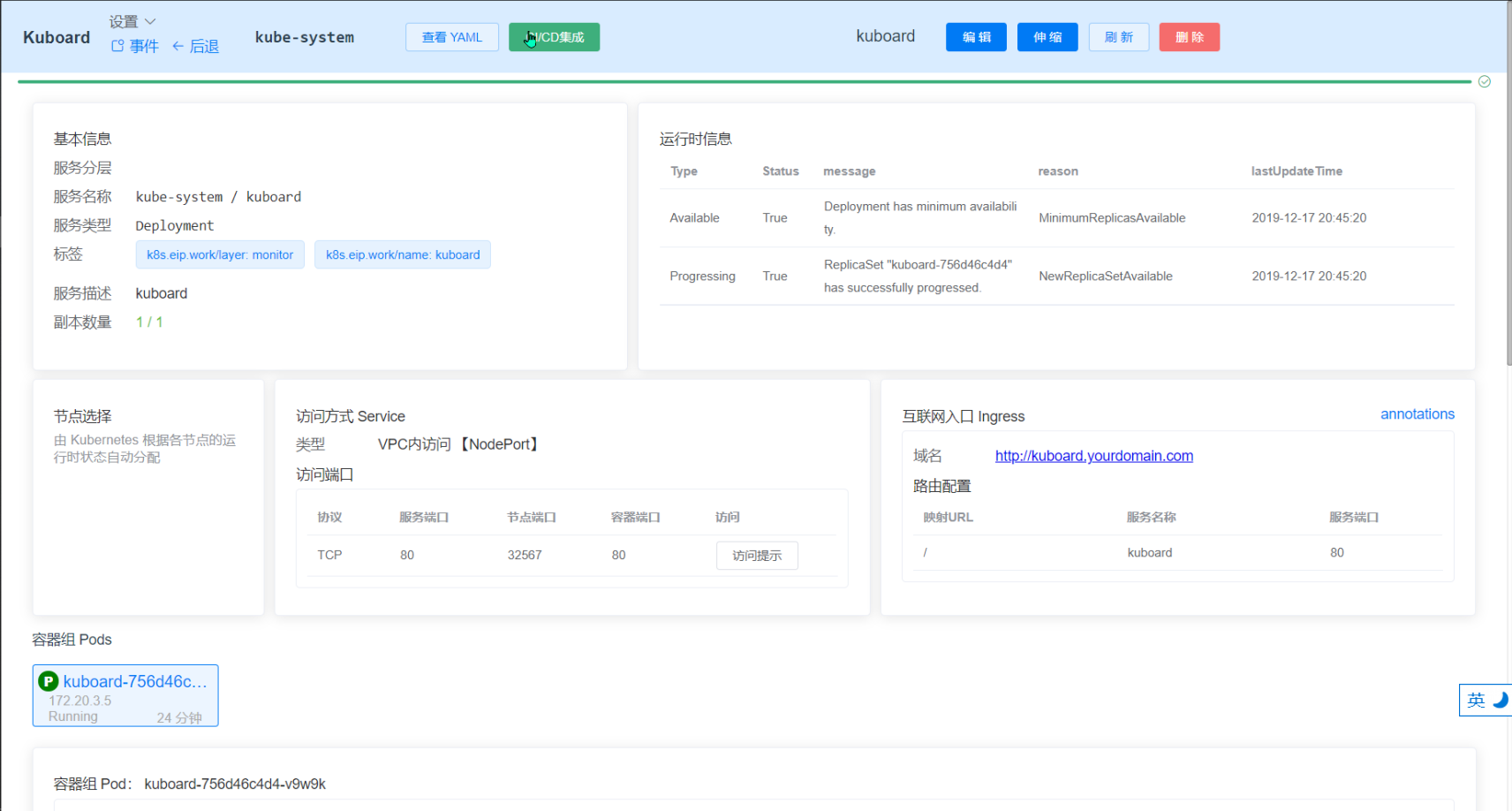

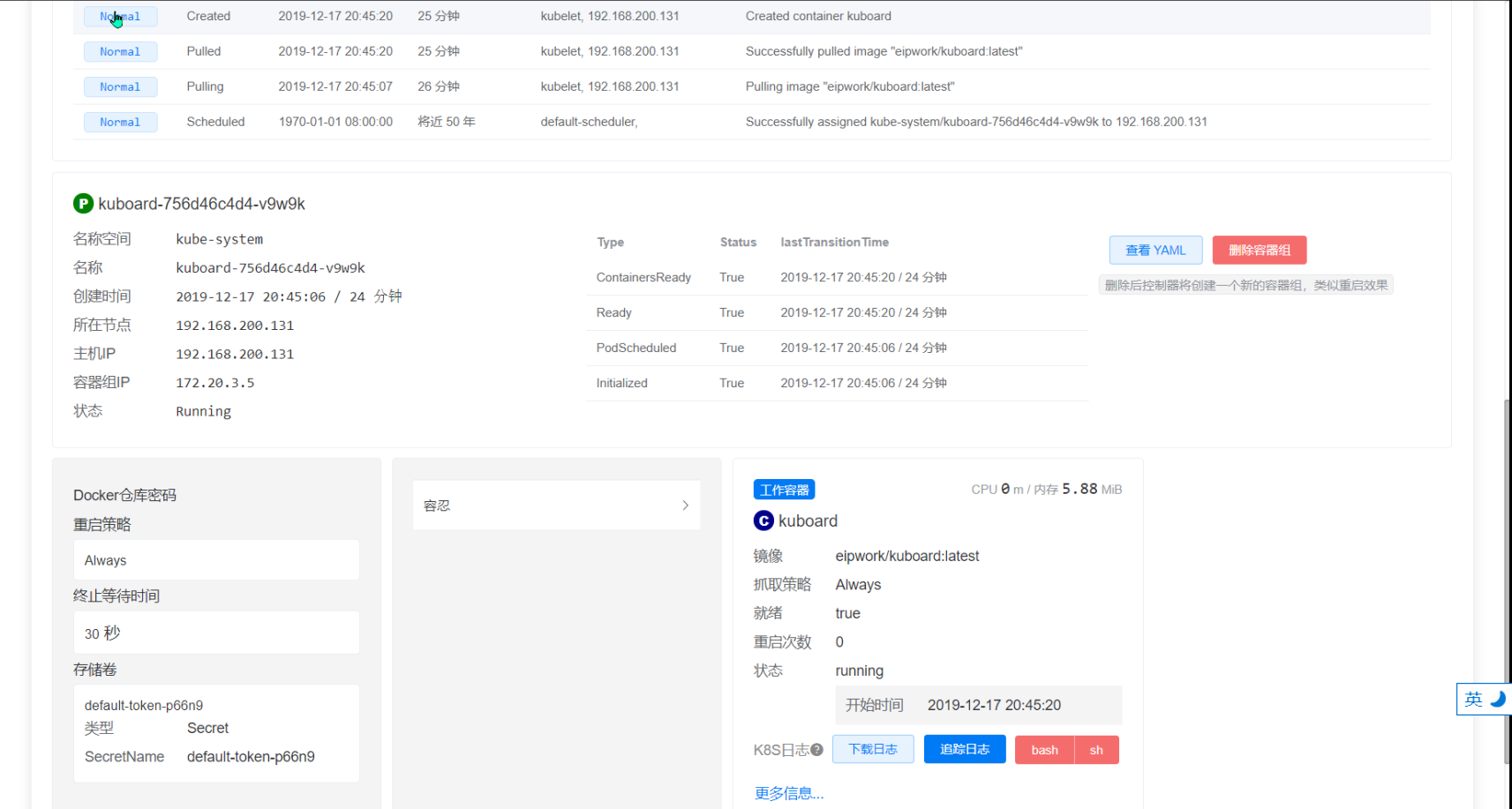

6.1 Deploy Kuboard

Reference: https://www.kuboard.cn/install/install-dashboard.html#%E5%9C%A8%E7%BA%BF%E4%BD%93%E9%AA%8C

# Deploy with kubectl apply

[root@ansible ~]# kubectl apply -f https://kuboard.cn/install-script/kuboard.yaml

deployment.apps/kuboard created

service/kuboard created

serviceaccount/kuboard-user created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-user created

serviceaccount/kuboard-viewer created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-viewer created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-viewer-node created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-viewer-pvp created

ingress.extensions/kuboard created

# Check that Kuboard is running:

[root@ansible ~]# kubectl get pods -l k8s.eip.work/name=kuboard -n kube-system

NAME READY STATUS RESTARTS AGE

kuboard-756d46c4d4-v9w9k 1/1 Running 0 86s

[root@ansible ~]# kubectl get svc kuboard -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kuboard NodePort 10.68.74.195 <none> 80:32567/TCP 90s

# Get the administrator login token

[root@ansible ~]# kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d

eyJhbGciOiJSUzI1NiIsImtpZCI6Ilg0YTdfazlUS3NRaXlMMmtYWVRWd2djNTg5WEliNGNPSXNnWkZPRVphWUUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJvYXJkLXVzZXItdG9rZW4tdmJ3cjgiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoia3Vib2FyZC11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiODRhMWJiYjQtZmFiZC00ZGJiLWFkNDItZjQwY2I4NGQ2N2JhIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmt1Ym9hcmQtdXNlciJ9.i3eCxfK3cAXOEKpWUZYjoaGRafg2VKa0bXvWHspOfiNuGlZTL2XAMlUeaDZs95ek4KD-B6zZwtfIEOQQ8zBERa2I9WP_hHyV_gT8FQCt9nRyiTVC5jBYKxSWFE5-38Oc-sjFlvGoHHVfSw9Kn45KWROln3QmhTdXEsLFJM0B1p2btCMkBsnEVWYkpjymffG_y7mvHg5PjhpCKtLRdFG_VC0zOvPktVPjiGxiiE8VqAcH36yVlcDXpdBy-p-0fvpKn4_pvOigjK81UZO5RnCCTt15iSaGYNytZeAOE3tlTtYU7d1aLIHcm58Mt5iRlCt1rWP7wjguXwL0tqHOerz7fQ

# Get the read-only token

[root@ansible ~]# kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-viewer | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d

eyJhbGciOiJSUzI1NiIsImtpZCI6Ilg0YTdfazlUS3NRaXlMMmtYWVRWd2djNTg5WEliNGNPSXNnWkZPRVphWUUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJvYXJkLXZpZXdlci10b2tlbi1sMjRxYiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJvYXJkLXZpZXdlciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjgyMTlkMzZiLWNmY2MtNDA1Ny1hZjFiLTc0Nzg2OTBlNTdmMCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJvYXJkLXZpZXdlciJ9.p1Ko31YKFJpRtoD0JuR_QSvsiIo46sF5Jqde2KYp0a1Ic0sBnG57GH9r-l2FADbnbpKVYK4_BP22NkHFlRY6QJod2bSs5XJ9fnvkeofIKVwqWOEJQ1YDOabzl6F4zt-kThvR-EnAUYadJN_gLOZ3-wOppA7PqmmHw2iNZPZvithhd7sJ1tqNXBC_Hjm2-CzEls0zF-LGNxVhGTq4tZ8Bh2cduOQRj1VEGlDtqgB9LeZyBSkNeL5gUi2vje7YD4U1ALvhxM-GqObLx5aJZW7R26ARGoTXUd8tWeTCUkpC_jYGe5bF7hg6ukn5U_qkerd2tFClQwnDTsSIW-T1HwS-2g

Use one of the tokens above to log in to Kuboard

The Kuboard Service is exposed as a NodePort, and in this deployment the NodePort is 32567; Kuboard can be reached as follows.

http://<IP address of any worker node>:32567/
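The NodePort is assigned when the Service is created, so it will probably differ in another cluster. It can be read from the Service rather than copied by hand. In this sketch kuboard_url is a hypothetical helper and 192.168.200.131 is simply one of the worker nodes from the node table.

```shell
#!/bin/sh
# kuboard_url is a hypothetical helper.
# Prints the Kuboard URL for a given node IP ($1) and NodePort ($2).
kuboard_url() {
    echo "http://$1:$2/"
}

# Query the cluster only when kubectl is available on this machine.
if command -v kubectl >/dev/null 2>&1; then
    # Read the NodePort of the first port of the kuboard Service.
    port=$(kubectl -n kube-system get svc kuboard -o jsonpath='{.spec.ports[0].nodePort}')
    # Print a URL only if the lookup returned a port.
    if [ -n "$port" ]; then
        kuboard_url 192.168.200.131 "$port"
    fi
fi
```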

Remove Kuboard

[root@ansible ~]# kubectl delete -f https://kuboard.cn/install-script/kuboard.yaml

deployment.apps "kuboard" deleted

service "kuboard" deleted

serviceaccount "kuboard-user" deleted

clusterrolebinding.rbac.authorization.k8s.io "kuboard-user" deleted

serviceaccount "kuboard-viewer" deleted

clusterrolebinding.rbac.authorization.k8s.io "kuboard-viewer" deleted

clusterrolebinding.rbac.authorization.k8s.io "kuboard-viewer-node" deleted

clusterrolebinding.rbac.authorization.k8s.io "kuboard-viewer-pvp" deleted

ingress.extensions "kuboard" deleted

7. Quick Test of the Deployed Cluster

List the cluster nodes:

[root@k8s-master1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.200.128 Ready,SchedulingDisabled master 130m v1.16.2

192.168.200.129 Ready,SchedulingDisabled master 130m v1.16.2

192.168.200.130 Ready,SchedulingDisabled master 130m v1.16.2

192.168.200.131 Ready node 127m v1.16.2

192.168.200.132 Ready node 127m v1.16.2

Show cluster information

[root@k8s-master1 ~]# kubectl cluster-info

Kubernetes master is running at https://192.168.200.128:6443

CoreDNS is running at https://192.168.200.128:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

Metrics-server is running at https://192.168.200.128:6443/api/v1/namespaces/kube-system/services/https:metrics-server:/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.