EFK Tutorial 3: Multi-Instance ElasticSearch Deployment

Purpose:

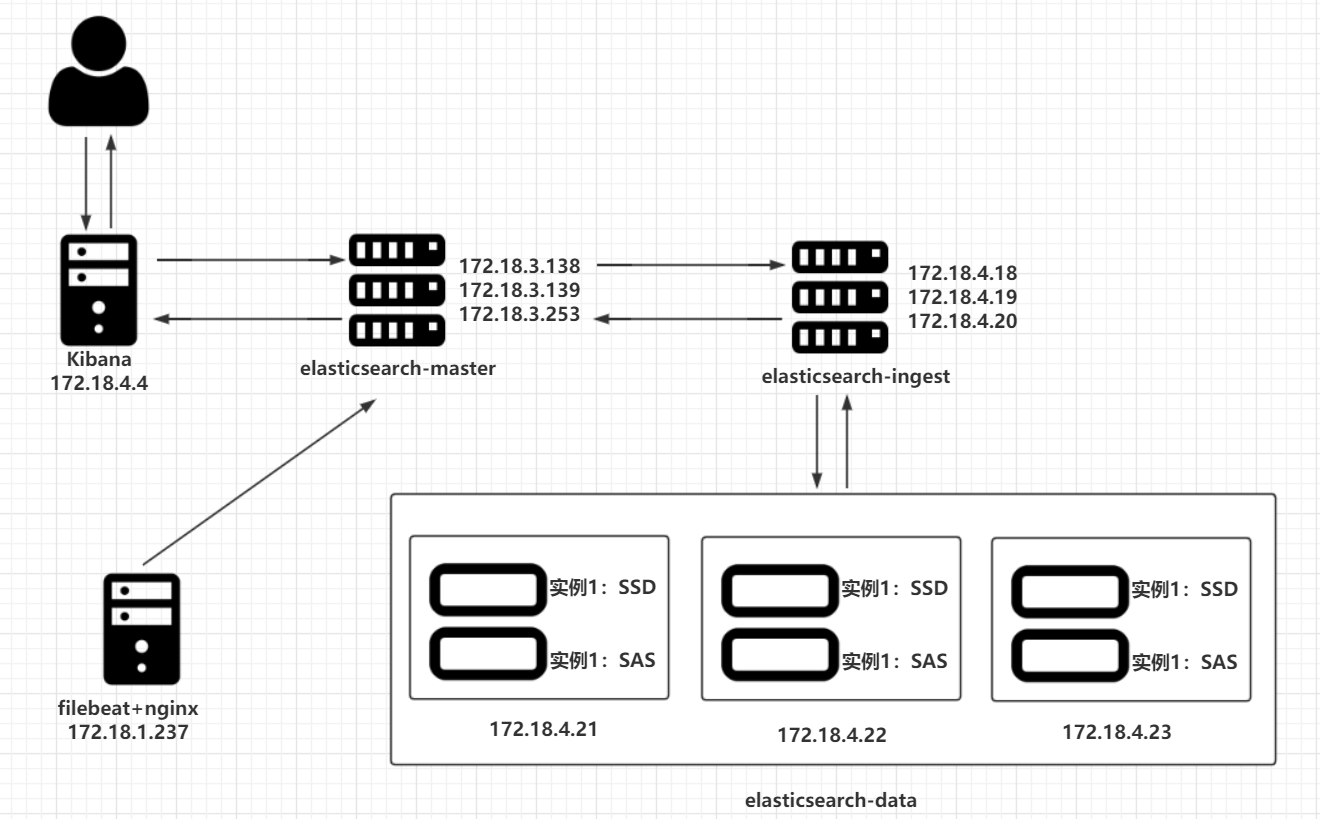

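▷ The first article, "EFK Tutorial - Quick Start Guide", covered installing and deploying EFK. The ES architecture there had three nodes, with the master, ingest and data roles all colocated on each of three servers.

▷ The second article, "EFK Tutorial - ElasticSearch High-Performance, High-Availability Architecture", explained what the data, ingest and master roles are for and deployed each role on its own three nodes, maximizing performance while keeping the cluster highly available.

In the previous two articles, each server in the ES cluster ran a single instance. This article runs multiple ES instances per server within one cluster so that resources are allocated sensibly. For example, if a data server has both SSD and SAS disks, hot data can be stored on the SSD and cold data on the SAS disk, separating hot and cold data.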

▷ Server configuration:

| Name | IP | CPU | Memory | SAS disk | SSD disk | HTTP port | Transport port |
|---|---|---|---|---|---|---|---|
| filebeat | 172.18.1.237 | 2 | 2G | / | / | / | / |
| kibana | 172.18.4.4 | 2 | 2G | / | / | 5601 | / |
| elasticsearch-master-1 | 172.18.3.138 | 4 | 4G | / | / | 9200 | 9300 |
| elasticsearch-master-2 | 172.18.3.139 | 4 | 4G | / | / | 9200 | 9300 |
| elasticsearch-master-3 | 172.18.3.253 | 4 | 4G | / | / | 9200 | 9300 |
| elasticsearch-ingest-1 | 172.18.4.18 | 2 | 1G | / | / | 9200 | 9300 |
| elasticsearch-ingest-2 | 172.18.4.19 | 2 | 1G | / | / | 9200 | 9300 |
| elasticsearch-ingest-3 | 172.18.4.20 | 2 | 1G | / | / | 9200 | 9300 |
| elasticsearch-data-1-SAS | 172.18.4.21 | 1 | 1G | 10G | / | 9200 | 9300 |
| elasticsearch-data-1-SSD | 172.18.4.21 | 1 | 1G | / | 10G | 9201 | 9301 |
| elasticsearch-data-2-SAS | 172.18.4.22 | 1 | 1G | 10G | / | 9200 | 9300 |
| elasticsearch-data-2-SSD | 172.18.4.22 | 1 | 1G | / | 10G | 9201 | 9301 |
| elasticsearch-data-3-SAS | 172.18.4.23 | 1 | 1G | 10G | / | 9200 | 9300 |
| elasticsearch-data-3-SSD | 172.18.4.23 | 1 | 1G | / | 10G | 9201 | 9301 |

▷ Architecture diagram

I. Start the services on every node

# ES nodes (9 in total, starting with the masters)

sudo -u elasticsearch /opt/elasticsearch/bin/elasticsearch

# nginx node

systemctl start nginx

Check the cluster health

qqq@qqq:~$ curl http://172.18.3.138:9200/_cat/health?v

epoch timestamp cluster status node.total node.data shards pri relo init unassign pending_tasks max_task_wait_time active_shards_percent

1574541947 20:45:47 007-project green 9 3 10 5 0 0 0 0 - 100.0%

List the cluster nodes

qqq@qqq:~$ curl http://172.18.3.138:9200/_cat/nodes?v

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

172.18.4.18 28 93 0 0.03 0.20 0.16 i - es-ingest1

172.18.4.21 10 94 1 0.48 0.73 0.37 d - es-data1

172.18.4.20 27 94 0 0.02 0.22 0.17 i - es-ingest3

172.18.3.139 12 48 0 0.05 0.16 0.13 m - es-2

172.18.4.22 10 93 1 0.45 0.69 0.35 d - es-data2

172.18.4.23 10 94 1 0.38 0.59 0.30 d - es-data3

172.18.4.19 28 93 0 0.03 0.27 0.20 i - es-ingest2

172.18.3.138 18 78 0 0.00 0.08 0.10 m - es-1

172.18.3.253 13 47 0 0.11 0.08 0.06 m * es-3

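II. Deploy two instances on es-data1

1️⃣ Migrate the indices:

Make sure no index shards remain on the current instance

# The indices are currently spread across es-data1, es-data2 and es-data3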

qqq@qqq:~$ curl "http://172.18.3.138:9200/_cat/shards?h=n"

es-data3

es-data2

es-data2

es-data1

es-data3

es-data1

es-data3

es-data2

es-data2

es-data1

# Move the indices to the other two nodes

qqq@qqq:~$ curl -X PUT "http://172.18.3.138:9200/*/_settings?pretty" -H 'Content-Type: application/json' -d'

> {

> "index.routing.allocation.include._ip": "172.18.4.22,172.18.4.23"

> }'

{

"acknowledged" : true

}
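The settings call returns as soon as it is acknowledged, while the shards themselves move in the background. A minimal sketch of waiting for the move to finish, assuming the cluster health API's `wait_for_no_relocating_shards` parameter; the function names `health_wait_url` and `wait_for_relocation` are hypothetical helpers, not part of Elasticsearch:

```shell
#!/usr/bin/env bash
# Hypothetical helpers; the names are illustrative only.

# Build the cluster-health URL that blocks until no shard is relocating.
# $1: base URL of any node, $2: optional timeout (defaults to 60s)
health_wait_url() {
  local base="$1" timeout="${2:-60s}"
  printf '%s/_cluster/health?wait_for_no_relocating_shards=true&timeout=%s\n' "$base" "$timeout"
}

# Call the URL. The JSON response contains "timed_out": true if the wait expired.
wait_for_relocation() {
  curl -s "$(health_wait_url "$@")"
}
```

Running `wait_for_relocation http://172.18.3.138:9200 120s` before stopping the instance would give the relocation up to two minutes to complete.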

# Confirm that the indices now sit on es-data2 and es-data3

qqq@qqq:~$ curl "http://172.18.3.138:9200/_cat/shards?h=n"

es-data3

es-data2

es-data3

es-data2

es-data3

es-data2

es-data3

es-data2

es-data3

es-data2
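Reading a long list of node names by eye gets error-prone as the shard count grows. As a rough sketch, the output of `_cat/shards?h=n` can be tallied per node; `count_shards_by_node` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: tally shards per node from `_cat/shards?h=n` output on stdin.
# Blank lines are skipped; output is "node count", sorted by node name.
count_shards_by_node() {
  awk 'NF { count[$1]++ } END { for (n in count) print n, count[n] }' | sort
}
```

For example, `curl -s "http://172.18.3.138:9200/_cat/shards?h=n" | count_shards_by_node` prints one line per node; a node that is safe to stop should not appear at all.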

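2️⃣ Deploy two instances on es-data1

Because resources are limited, no additional disks were mounted; directories stand in for them.

| Install directory | Data directory | HTTP port | Transport port |
|---|---|---|---|
| /opt/elasticsearch-SAS | /data/SAS | 9200 | 9300 |
| /opt/elasticsearch-SSD | /data/SSD | 9201 | 9301 |

# Reconfigure the existing instance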

[root@es-data1 opt]# pkill -f elasticsearch

[root@es-data1 opt]# mv elasticsearch elasticsearch-SAS

[root@es-data1 opt]# mkdir -p /opt/logs/elasticsearch-SAS

[root@es-data1 ~]# chown elasticsearch.elasticsearch /opt/logs/elasticsearch-SAS -R

# SAS instance configuration: /opt/elasticsearch-SAS/config/elasticsearch.yml

[root@es-data1 opt]# grep -Ev '^$|^#' /opt/elasticsearch-SAS/config/elasticsearch.yml

cluster.name: 007-project

node.name: es-data1-SAS

path.data: /data/SAS

path.logs: /opt/logs/elasticsearch-SAS

network.host: 172.18.4.21

http.port: 9200

transport.port: 9300

# The ports must be specified

discovery.seed_hosts: ["es-1:9300", "es-2:9300", "es-3:9300"]

cluster.initial_master_nodes: ["es-1:9300", "es-2:9300","es-3:9300"]

http.cors.enabled: true

http.cors.allow-origin: "*"

node.master: false

node.ingest: false

node.data: true

# Allow at most 2 instances to run on this host

node.max_local_storage_nodes: 2

# SSD instance

[root@es-data1 opt]# tar xf software/elasticsearch-7.3.2-linux-x86_64.tar.gz -C /opt/

[root@es-data1 opt]# mv elasticsearch-7.3.2 elasticsearch-SSD

[root@es-data1 opt]# mkdir /data/SSD /opt/logs/elasticsearch-SSD

[root@es-data1 ~]# chown elasticsearch.elasticsearch /data/SSD /opt/logs/elasticsearch-SSD /opt/elasticsearch-SSD -R

# SSD instance configuration: /opt/elasticsearch-SSD/config/elasticsearch.yml

[root@es-data1 opt]# grep -Ev '^$|^#' /opt/elasticsearch-SSD/config/elasticsearch.yml

cluster.name: 007-project

node.name: es-data1-SSD

path.data: /data/SSD

path.logs: /opt/logs/elasticsearch-SSD

network.host: 172.18.4.21

# The ports must differ from the SAS instance; the master entries must include the port

http.port: 9201

transport.port: 9301

discovery.seed_hosts: ["es-1:9300", "es-2:9300", "es-3:9300"]

cluster.initial_master_nodes: ["es-1:9300", "es-2:9300","es-3:9300"]

http.cors.enabled: true

http.cors.allow-origin: "*"

node.master: false

node.ingest: false

node.data: true

node.max_local_storage_nodes: 2
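Two instances on one host must not share a node name, data path, log path or port. A minimal sketch of a pre-start check, assuming flat `key: value` lines like the ones shown by the `grep` output; `yaml_value` and `check_no_collision` are hypothetical helpers and not a real YAML parser:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for flat "key: value" lines only; this is not a YAML parser.

# Print the value of one key from a config file.
# $1: file, $2: key
yaml_value() {
  awk -F': *' -v key="$2" '$1 == key { print $2; exit }' "$1"
}

# Return non-zero if two instance configs share a node name, path, or port.
# A key missing from both files also counts as a collision, because both
# instances would then fall back to the same default.
check_no_collision() {
  local a="$1" b="$2" key status=0
  for key in node.name path.data path.logs http.port transport.port; do
    if [ "$(yaml_value "$a" "$key")" = "$(yaml_value "$b" "$key")" ]; then
      echo "collision on $key" >&2
      status=1
    fi
  done
  return "$status"
}
```

A call such as `check_no_collision /opt/elasticsearch-SAS/config/elasticsearch.yml /opt/elasticsearch-SSD/config/elasticsearch.yml` could be run before starting the second instance.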

# Start the two elasticsearch instances

sudo -u elasticsearch /opt/elasticsearch-SAS/bin/elasticsearch

sudo -u elasticsearch /opt/elasticsearch-SSD/bin/elasticsearch

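III. Deploy two instances on es-data2

1️⃣ Migrate the indices:

Because resources are limited, no additional disks were mounted; directories stand in for them.

| Install directory | Data directory | HTTP port | Transport port |
|---|---|---|---|
| /opt/elasticsearch-SAS | /data/SAS | 9200 | 9300 |
| /opt/elasticsearch-SSD | /data/SSD | 9201 | 9301 |

Make sure no index shards remain on the current instance

# The indices currently sit on es-data2 and es-data3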

qqq@qqq:~$ curl "http://172.18.3.138:9200/_cat/shards?h=n"

es-data3

es-data2

es-data3

es-data2

es-data3

es-data2

es-data3

es-data2

es-data3

es-data2

# List the current cluster nodes

qqq@qqq:~$ curl http://172.18.3.138:9200/_cat/nodes?v

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

172.18.4.20 22 92 0 0.00 0.01 0.05 i - es-ingest3

172.18.4.22 10 92 0 0.00 0.03 0.05 d - es-data2

172.18.4.23 8 92 0 0.00 0.01 0.05 d - es-data3

172.18.3.253 24 48 0 0.00 0.01 0.05 m * es-3

172.18.4.18 23 92 0 0.01 0.03 0.05 i - es-ingest1

172.18.4.21 10 93 23 0.07 0.56 0.50 d - es-data1-SAS

172.18.4.19 23 92 0 0.00 0.01 0.05 i - es-ingest2

172.18.4.21 7 93 7 0.07 0.56 0.50 d - es-data1-SSD

172.18.3.139 9 49 0 0.00 0.01 0.05 m - es-2

172.18.3.138 13 79 0 0.02 0.03 0.05 m - es-1

Move the indices to the other nodes

qqq@qqq:~$ curl -X PUT "http://172.18.3.138:9200/*/_settings?pretty" -H 'Content-Type: application/json' -d'

{

"index.routing.allocation.include._ip": "172.18.4.21,172.18.4.23"

}'

{

"acknowledged" : true

}

Check where the indices are now

qqq@qqq:~$ curl "http://172.18.3.138:9200/_cat/shards?h=n"

es-data1-SSD

es-data1-SAS

es-data3

es-data1-SSD

es-data3

es-data1-SAS

es-data3

es-data1-SAS

es-data3

es-data1-SSD

2️⃣ Deploy two instances on es-data2

The existing SAS instance

# Stop the old process

[root@es-data2 ~]# ps -ef | grep ela

root 18205 18186 0 01:42 pts/0 00:00:00 sudo -u elasticsearch /opt/elasticsearch/bin/elasticsearch

......

[root@es-data2 ~]# kill 18205

# Rename the directory and prepare the log path

[root@es-data2 opt]# mv elasticsearch elasticsearch-SAS

[root@es-data2 opt]# mkdir -p /opt/logs/elasticsearch-SAS

[root@es-data2 opt]# chown elasticsearch.elasticsearch /opt/logs/elasticsearch-SAS -R

# SAS instance configuration: /opt/elasticsearch-SAS/config/elasticsearch.yml

[root@es-data2 opt]# grep -Ev '^$|^#' /opt/elasticsearch-SAS/config/elasticsearch.yml

cluster.name: 007-project

# The node name must be changed

node.name: es-data2-SAS

path.data: /data/SAS

path.logs: /opt/logs/elasticsearch-SAS

network.host: 172.18.4.22

# Set the ports explicitly. If omitted they default to 9200 and 9300; the master entries must include the port

http.port: 9200

transport.port: 9300

discovery.seed_hosts: ["es-1:9300", "es-2:9300", "es-3:9300"]

cluster.initial_master_nodes: ["es-1:9300", "es-2:9300","es-3:9300"]

http.cors.enabled: true

http.cors.allow-origin: "*"

node.master: false

node.ingest: false

node.data: true

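The new SSD instance

Pay attention to the node name, the data path, the log path, this node's HTTP and transport ports, the other cluster hosts and their ports, and the master nodes' ports.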

[root@es-data2 opt]# tar xf software/elasticsearch-7.3.2-linux-x86_64.tar.gz -C /opt/

[root@es-data2 opt]# mv elasticsearch-7.3.2 elasticsearch-SSD

[root@es-data2 opt]# mkdir /data/SSD /opt/logs/elasticsearch-SSD

[root@es-data2 opt]# chown elasticsearch.elasticsearch /data/SSD /opt/logs/elasticsearch-SSD /opt/elasticsearch-SSD -R

# SSD instance configuration: /opt/elasticsearch-SSD/config/elasticsearch.yml

[root@es-data2 opt]# grep -Ev '^$|^#' /opt/elasticsearch-SSD/config/elasticsearch.yml

cluster.name: 007-project

node.name: es-data2-SSD

path.data: /data/SSD

path.logs: /opt/logs/elasticsearch-SSD

network.host: 172.18.4.22

http.port: 9201

transport.port: 9301

discovery.seed_hosts: ["es-1:9300", "es-2:9300", "es-3:9300"]

cluster.initial_master_nodes: ["es-1:9300", "es-2:9300","es-3:9300"]

http.cors.enabled: true

http.cors.allow-origin: "*"

node.master: false

node.ingest: false

node.data: true

# Start the two elasticsearch instances

sudo -u elasticsearch /opt/elasticsearch-SAS/bin/elasticsearch

sudo -u elasticsearch /opt/elasticsearch-SSD/bin/elasticsearch

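IV. Deploy two instances on es-data3

1️⃣ Migrate the indices:

Because resources are limited, no additional disks were mounted; directories stand in for them.

| Install directory | Data directory | HTTP port | Transport port |
|---|---|---|---|
| /opt/elasticsearch-SAS | /data/SAS | 9200 | 9300 |
| /opt/elasticsearch-SSD | /data/SSD | 9201 | 9301 |

Make sure no index shards remain on the current instance

The indices currently sit on es-data1 and es-data3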

qqq@qqq:~$ curl "http://172.18.3.138:9200/_cat/shards?h=n"

es-data1-SSD

es-data1-SAS

es-data3

es-data1-SSD

es-data3

es-data1-SAS

es-data3

es-data1-SAS

es-data3

es-data1-SSD

List the current cluster nodes

qqq@qqq:~$ curl http://172.18.3.138:9200/_cat/nodes?v

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

172.18.4.22 8 94 1 0.05 0.63 0.45 d - es-data2-SSD

172.18.3.253 14 48 0 0.08 0.04 0.05 m * es-3

172.18.4.20 14 92 0 0.00 0.01 0.05 i - es-ingest3

172.18.4.22 9 94 1 0.05 0.63 0.45 d - es-data2-SAS

172.18.4.21 10 94 0 0.14 0.11 0.19 d - es-data1-SSD

172.18.3.139 29 49 0 0.00 0.01 0.05 m - es-2

172.18.4.23 9 93 0 0.00 0.03 0.05 d - es-data3

172.18.3.138 30 79 0 0.00 0.02 0.05 m - es-1

172.18.4.19 14 93 0 0.00 0.01 0.05 i - es-ingest2

172.18.4.21 7 94 0 0.14 0.11 0.19 d - es-data1-SAS

172.18.4.18 15 92 0 0.00 0.01 0.05 i - es-ingest1

Move the indices to the other nodes

qqq@qqq:~$ curl -X PUT "http://172.18.3.138:9200/*/_settings?pretty" -H 'Content-Type: application/json' -d'

{

"index.routing.allocation.include._ip": "172.18.4.21,172.18.4.22"

}'

{

"acknowledged" : true

}

Check where the indices are now

qqq@qqq:~$ curl "http://172.18.3.138:9200/_cat/shards?h=n"

es-data1-SSD

es-data1-SAS

es-data2-SSD

es-data1-SSD

es-data2-SSD

es-data1-SAS

es-data2-SAS

es-data1-SAS

es-data2-SAS

es-data1-SSD

2️⃣ Deploy two instances on es-data3

The existing SAS instance

# Gracefully stop the old process

[root@es-data3 ~]# kill $(ps -ef | grep ela | grep root | grep -v grep | awk '{print $2}')

# Rename the directory and prepare the log path

[root@es-data3 opt]# mv elasticsearch elasticsearch-SAS

[root@es-data3 opt]# mkdir -p /opt/logs/elasticsearch-SAS

[root@es-data3 opt]# chown elasticsearch.elasticsearch /opt/logs/elasticsearch-SAS -R

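# SAS instance configuration: /opt/elasticsearch-SAS/config/elasticsearch.yml

# Pay attention to the node name, the data path, the log path, this node's HTTP and transport ports, the other cluster hosts and their ports, and the master nodes' ports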

[root@es-data3 opt]# grep -Ev '^$|^#' /opt/elasticsearch-SAS/config/elasticsearch.yml

cluster.name: 007-project

node.name: es-data3-SAS

path.data: /data/SAS

path.logs: /opt/logs/elasticsearch-SAS

network.host: 172.18.4.23

http.port: 9200

transport.port: 9300

discovery.seed_hosts: ["es-1:9300", "es-2:9300", "es-3:9300"]

cluster.initial_master_nodes: ["es-1:9300", "es-2:9300","es-3:9300"]

http.cors.enabled: true

http.cors.allow-origin: "*"

node.master: false

node.ingest: false

node.data: true

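The new SSD instance

Pay attention to the node name, the data path, the log path, this node's HTTP and transport ports, the other cluster hosts and their ports, and the master nodes' ports.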

[root@es-data3 opt]# tar xf software/elasticsearch-7.3.2-linux-x86_64.tar.gz -C /opt/

[root@es-data3 opt]# mv elasticsearch-7.3.2 elasticsearch-SSD

[root@es-data3 opt]# mkdir /data/SSD /opt/logs/elasticsearch-SSD

[root@es-data3 opt]# chown elasticsearch.elasticsearch /data/SSD /opt/logs/elasticsearch-SSD /opt/elasticsearch-SSD -R

[root@es-data3 opt]# cp /opt/elasticsearch-SAS/config/elasticsearch.yml /opt/elasticsearch-SSD/config/elasticsearch.yml

cp: overwrite ‘/opt/elasticsearch-SSD/config/elasticsearch.yml’? y

[root@es-data3 opt]# vim /opt/elasticsearch-SSD/config/elasticsearch.yml

[root@es-data3 opt]# grep -Ev '^$|^#' /opt/elasticsearch-SSD/config/elasticsearch.yml

cluster.name: 007-project

node.name: es-data3-SSD

path.data: /data/SSD

path.logs: /opt/logs/elasticsearch-SSD

network.host: 172.18.4.23

http.port: 9201

transport.port: 9301

discovery.seed_hosts: ["es-1:9300", "es-2:9300", "es-3:9300"]

cluster.initial_master_nodes: ["es-1:9300", "es-2:9300","es-3:9300"]

http.cors.enabled: true

http.cors.allow-origin: "*"

node.master: false

node.ingest: false

node.data: true

# Start the two elasticsearch instances

sudo -u elasticsearch /opt/elasticsearch-SAS/bin/elasticsearch

sudo -u elasticsearch /opt/elasticsearch-SSD/bin/elasticsearch

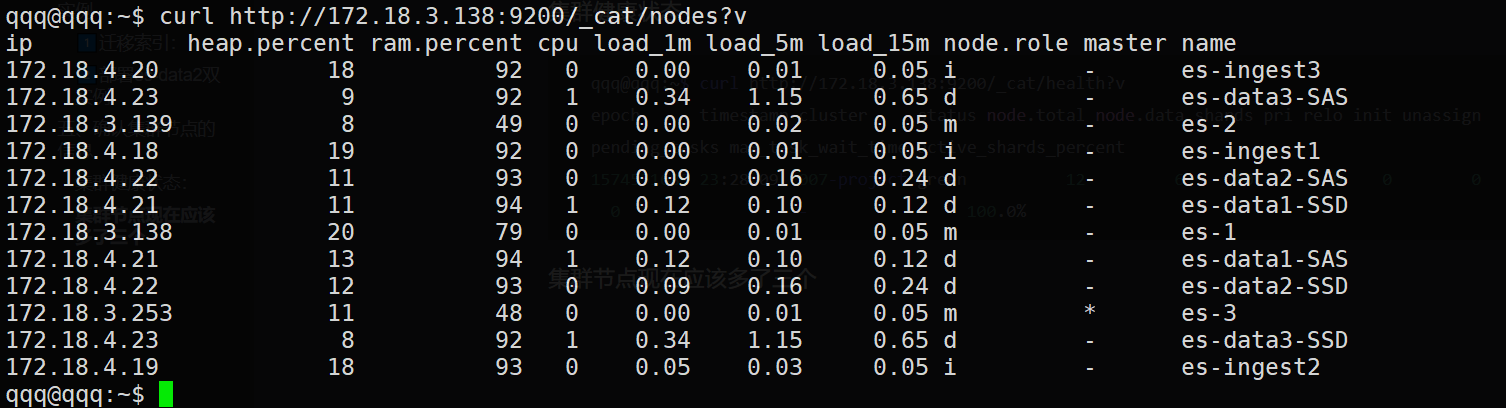

V. Verify the cluster node information

Cluster health:

qqq@qqq:~$ curl http://172.18.3.138:9200/_cat/health?v

epoch timestamp cluster status node.total node.data shards pri relo init unassign pending_tasks max_task_wait_time active_shards_percent

1574551689 23:28:09 007-project green 12 6 10 5 0 0 0 0 - 100.0%
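The same check can be scripted. A minimal sketch, assuming the `_cat/health` columns `status` and `node.total` shown in the header above are requested with the `h` parameter; `health_ok` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the cluster is green with the expected node count.
# $1: expected number of nodes; stdin: one "status node.total" line.
health_ok() {
  local expected="$1" status total
  read -r status total || return 1
  [ "$status" = "green" ] && [ "$total" = "$expected" ]
}
```

After all three data servers run two instances each, `curl -s "http://172.18.3.138:9200/_cat/health?h=status,node.total" | health_ok 12` should succeed.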

The cluster should now have three more nodes

qqq@qqq:~$ curl http://172.18.3.138:9200/_cat/nodes?v

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

172.18.4.20 18 92 0 0.00 0.01 0.05 i - es-ingest3

172.18.4.23 9 92 1 0.34 1.15 0.65 d - es-data3-SAS

172.18.3.139 8 49 0 0.00 0.02 0.05 m - es-2

172.18.4.18 19 92 0 0.00 0.01 0.05 i - es-ingest1

172.18.4.22 11 93 0 0.09 0.16 0.24 d - es-data2-SAS

172.18.4.21 11 94 1 0.12 0.10 0.12 d - es-data1-SSD

172.18.3.138 20 79 0 0.00 0.01 0.05 m - es-1

172.18.4.21 13 94 1 0.12 0.10 0.12 d - es-data1-SAS

172.18.4.22 12 93 0 0.09 0.16 0.24 d - es-data2-SSD

172.18.3.253 11 48 0 0.00 0.01 0.05 m * es-3

172.18.4.23 8 92 1 0.34 1.15 0.65 d - es-data3-SSD

172.18.4.19 18 93 0 0.05 0.03 0.05 i - es-ingest2

Current index locations

qqq@qqq:~$ curl "http://172.18.3.138:9200/_cat/shards?h=n"

es-data1-SSD

es-data1-SAS

es-data2-SSD

es-data1-SSD

es-data2-SSD

es-data1-SAS

es-data2-SAS

es-data1-SAS

es-data2-SAS

es-data1-SSD

Use the following command to spread the indices across every data node

qqq@qqq:~$ curl -X PUT "http://172.18.3.138:9200/*/_settings?pretty" -H 'Content-Type: application/json' -d'

{

"index.routing.allocation.include._ip": "172.18.4.21,172.18.4.22,172.18.4.23"

}'

{

"acknowledged" : true

}

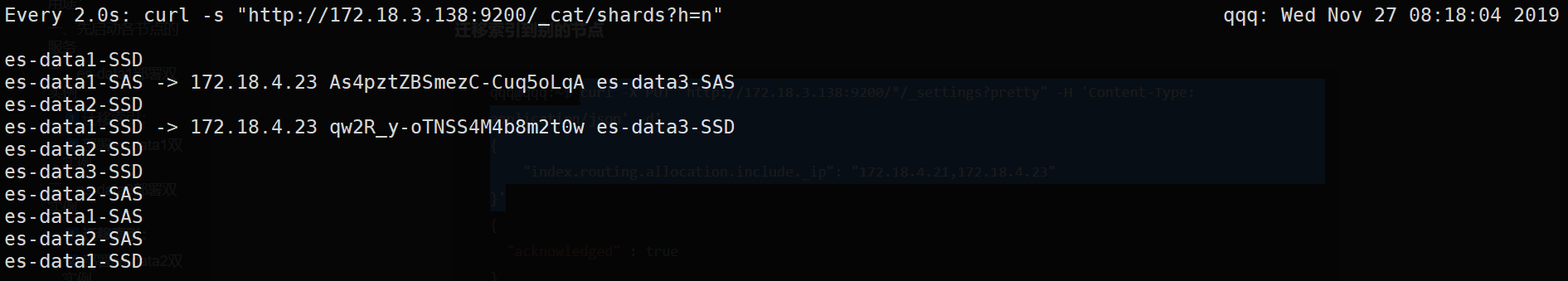

VI. Testing

Move all indices onto the SSD disks

qqq@qqq:~$ curl -X PUT "http://172.18.3.138:9200/*/_settings?pretty" -H 'Content-Type: application/json' -d'

{

"index.routing.allocation.include._host_ip": "",

"index.routing.allocation.include._host": "",

"index.routing.allocation.include._name": "",

"index.routing.allocation.include._ip": "",

"index.routing.allocation.require._name": "*-SSD"

}'

{

"acknowledged" : true

}
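The request above pins indices to a tier by matching the node-name suffix with `index.routing.allocation.require._name`. A rough sketch of generating that body for either tier: `tier_payload` is a hypothetical helper, and it clears only the earlier `include._ip` filter with `null` (which resets an index setting to its default), as an alternative to the empty strings used above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the settings body that pins indices to one tier.
# $1: node-name suffix used in this article, SSD or SAS.
tier_payload() {
  case "$1" in
    SSD|SAS) ;;
    *) echo "unknown tier: $1" >&2; return 1 ;;
  esac
  # null drops the earlier IP filter; require._name keeps shards on "*-<tier>" nodes.
  printf '{"index.routing.allocation.include._ip": null, "index.routing.allocation.require._name": "*-%s"}\n' "$1"
}
```

It could be passed to curl as `-d "$(tier_payload SAS)"` against an index pattern such as `nginx_*_2019.11`.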

Confirm that every index is on the SSD disks

qqq@qqq:~$ curl "http://172.18.3.138:9200/_cat/shards?h=n"

es-data2-SSD

es-data1-SSD

es-data2-SSD

es-data3-SSD

es-data2-SSD

es-data3-SSD

es-data1-SSD

es-data3-SSD

es-data1-SSD

es-data3-SSD

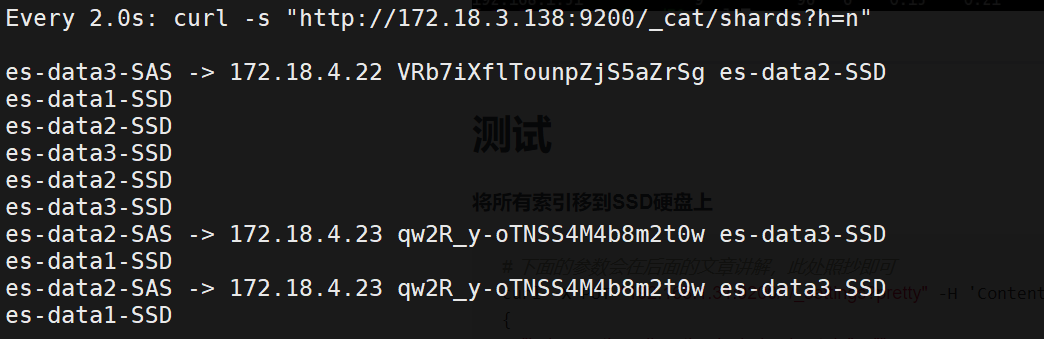

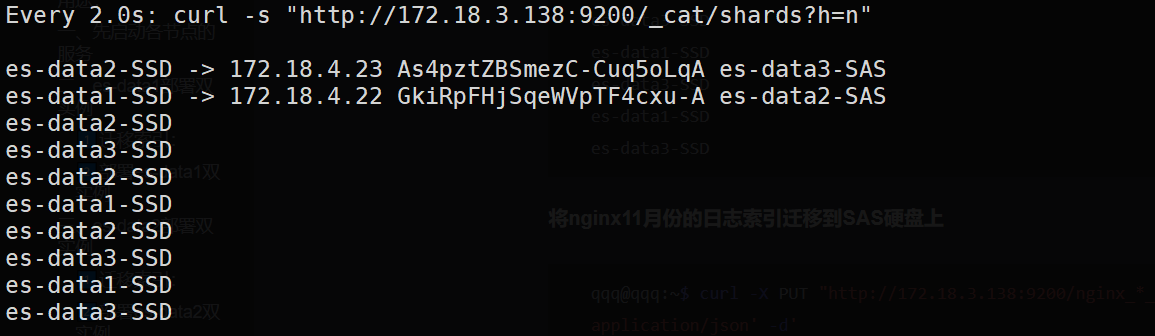

Move the November nginx log indices onto the SAS disks

qqq@qqq:~$ curl -X PUT "http://172.18.3.138:9200/nginx_*_2019.11/_settings?pretty" -H 'Content-Type: application/json' -d'

{

"index.routing.allocation.include._host_ip": "",

"index.routing.allocation.include._host": "",

"index.routing.allocation.include._name": "",

"index.routing.allocation.include._ip": "",

"index.routing.allocation.require._name": "*-SAS"

}'

{

"acknowledged" : true

}

qqq@qqq:~$ curl "http://172.18.3.138:9200/_cat/shards"

nginx_access_2019.11 0 r STARTED 9 75.2kb 172.18.4.23 es-data3-SAS

nginx_access_2019.11 0 p STARTED 9 75.2kb 172.18.4.22 es-data2-SAS

kibana_sample_data_logs 0 p STARTED 14074 11.4mb 172.18.4.22 es-data2-SSD

kibana_sample_data_logs 0 r STARTED 14074 11.4mb 172.18.4.23 es-data3-SSD

filebeat-7.3.2-2019.11.17-000001 0 p STARTED 0 283b 172.18.4.22 es-data2-SSD

filebeat-7.3.2-2019.11.17-000001 0 r STARTED 0 283b 172.18.4.21 es-data1-SSD

.kibana_task_manager 0 p STARTED 2 128.2kb 172.18.4.22 es-data2-SSD

.kibana_task_manager 0 r STARTED 2 128.2kb 172.18.4.23 es-data3-SSD

.kibana_1 0 r STARTED 43 223.3kb 172.18.4.21 es-data1-SSD

.kibana_1 0 p STARTED 43 338.9kb 172.18.4.23 es-data3-SSD